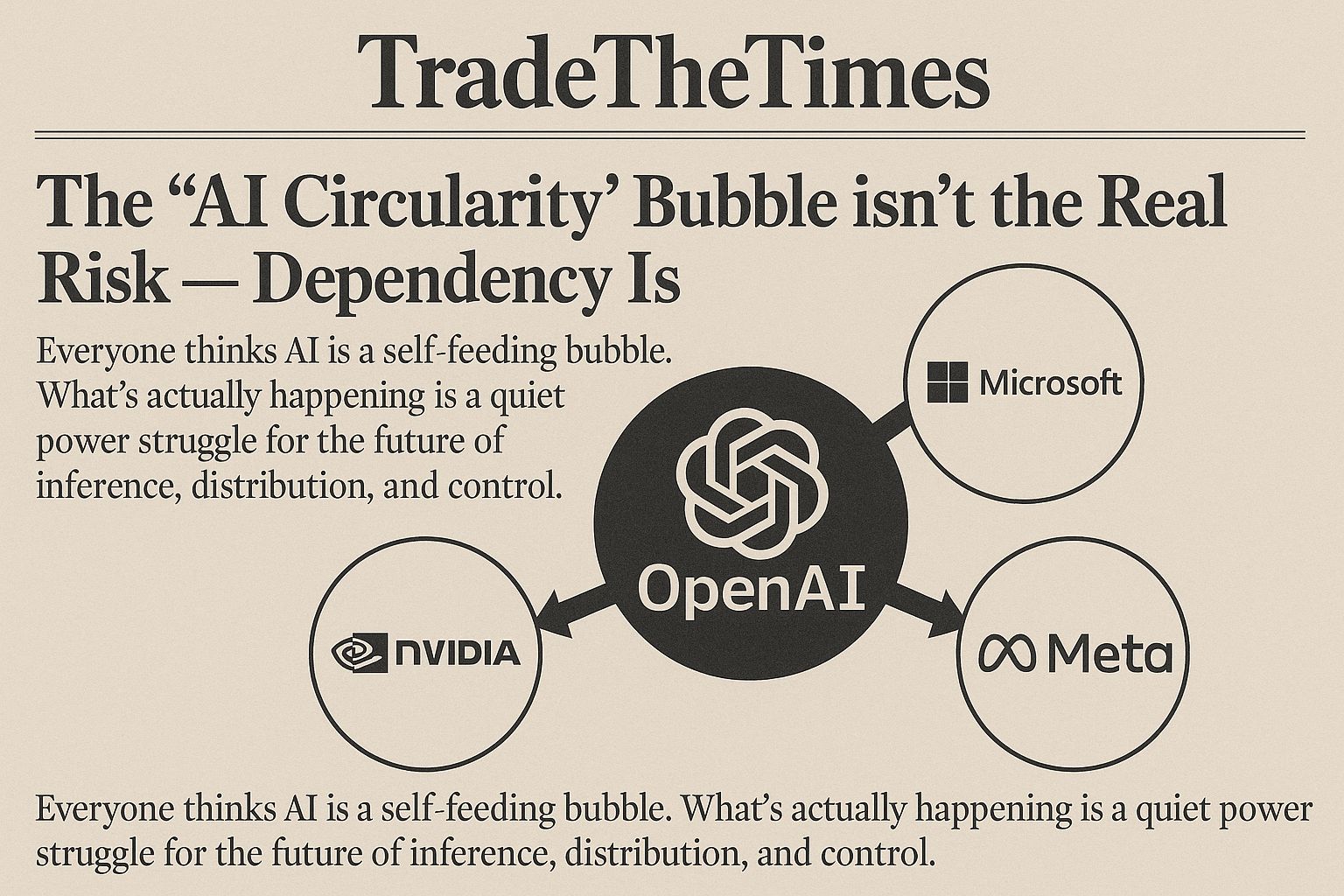

Open X lately and you’ll see the same story everywhere. Charts showing “AI circularity.”

Posts claiming the whole sector is a financial loop: NVIDIA finances CoreWeave → CoreWeave sells compute to OpenAI → OpenAI commits roughly $250B in future Azure spend, which shows up in Microsoft’s remaining performance obligations (RPO) → Microsoft records multi-billion dollar paper losses on its OpenAI stake → NVIDIA reinvests into the same ecosystem → Oracle appears with OpenAI-heavy cloud contracts that look more like structured finance than cloud services.

To many observers it looks like a capital merry-go-round. They see the loop and conclude: bubble. That instinct is understandable — but it misses the truth. This is not fake growth or financial engineering. What we are seeing is something far more strategic: a race to lock in power before the economics of AI shift.

The circularity is just the surface pattern of a much deeper battle. The giants of tech are not chasing the same goal. Each company is trying to win a different part of the next AI platform — and investing aggressively to secure its future position before the rules change.

The Four Different Games Being Played

Let’s simplify what each major player is actually trying to become:

NVIDIA: Defend the GPU Era

NVIDIA’s dominance today stems from training large AI models. But the real money in AI long-term is inference — the day-to-day use of models once training is done. Inference is the part that runs millions or billions of times per day. That is the “volume business” of AI.

NVIDIA’s problem is clear:

As inference gets cheaper and smaller, GPUs may lose relevance to specialized chips and edge devices. This is why NVIDIA’s behavior has become more aggressive:

• Financing cloud providers

• Backstopping unsold capacity at CoreWeave

• Locking customers deeper into the CUDA ecosystem

• Investing in OpenAI contingent on deploying massive NVIDIA systems

• Even renting and financing capacity from the same clouds they supply

They aren’t being generous. They’re defending the GPU inference future before it slips away.

Microsoft: Build the AI Operating System

Microsoft is not trying to sell the best model. They are trying to become the operating system of AI. This became obvious in Satya Nadella’s recent podcast discussions. He did not talk about AI like it’s a software product. He spoke like someone designing a digital power grid.

He described:

• Multi-region petabit networks that reroute workloads across states in real time

• Modular data centers that can pivot between chip generations without locking into any single vendor

• Data center architectures built around memory locality and model parallelism

It sounded less like SaaS and more like infrastructure for the next decade. Then came the key insight: agents. Not assistants. Not chatbots. Satya described agents as actual users of Microsoft software.

An agent opens Excel.

Pulls data.

Writes files back to SharePoint.

Triggers Power Automate workflows.

From Microsoft’s view, these agents generate the same telemetry, licenses, and “gravity” as human enterprise users did under Windows. This explains everything: Microsoft doesn’t care about OpenAI’s revenue. They care about owning the distribution layer beneath AI usage. Windows once controlled PC software distribution. Microsoft now wants to control AI workflow distribution.

That is why Microsoft tolerates enormous paper losses on its OpenAI equity stake. They are not paying for profits. They are paying for gravitational pull.

Google: Win the Inference Economy

Ironically, while many commentators say Google is “behind” in AI, Google is arguably the best positioned for the phase that actually matters financially. The market obsesses over training and big flashy models. But the real economic engine of AI is inference.

And inference is evolving quickly:

• Smaller models

• Domain-specific workloads

• Low-precision compute like FP4 instead of FP16

• More queries running on-device or near the edge
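To make the low-precision point concrete, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 7-billion-parameter model (the size is picked only for illustration) and counts weight storage alone, ignoring activations, caches, and any quantization overhead. FP16 stores each weight in 16 bits and FP4 in 4 bits, so the weights shrink by about 4x.

```python
# Bits per weight for each numeric format (FP16 = 16 bits, FP4 = 4 bits).
BITS_PER_WEIGHT = {"fp16": 16, "fp4": 4}


def weight_memory_gb(num_params: int, fmt: str) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    bits = BITS_PER_WEIGHT[fmt]
    return num_params * bits / 8 / 1e9


# Hypothetical 7-billion-parameter model, chosen only for illustration.
params = 7_000_000_000
fp16_gb = weight_memory_gb(params, "fp16")
fp4_gb = weight_memory_gb(params, "fp4")

print(f"FP16 weights: {fp16_gb:.1f} GB")
print(f"FP4 weights:  {fp4_gb:.1f} GB")
print(f"Reduction:    {fp16_gb / fp4_gb:.0f}x")
```

Less memory per model means more queries served per chip, or a model that fits on a device that could not hold it before. That is the mechanism that moves inference toward cheaper, more specialized hardware.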

This world favors cost efficiency and vertical integration, not generic GPUs. Google has been building exactly this future. Their exploding capex isn’t panic spending; it’s the scale-out of a vertical silicon stack built on TPUs such as v5e and v5p, with v5e in particular aimed at low-cost inference at massive scale. Google runs one of the very few production-grade inference ASIC supply chains in the world. Training gets headlines. Inference prints money.

Google Cloud backlog growing 82% year-over-year tells us the demand is real — and contractually locked, not speculative.

Meta: Scale Over Infrastructure

Meta’s strategy is simpler: They own billions of eyes and hands on social platforms. They are pushing AI into those surfaces to drive usage scale first, monetization later. They are trying to brute-force relevance through sheer distribution power.

The Real Risk: Dependency on OpenAI

Enterprise AI demand is not fake.

• AWS growth is stabilizing and re-accelerating

• Azure’s AI revenue contribution is clearly growing

• Google Cloud is scaling meaningfully

The hyperscalers are fine. The danger lies elsewhere. Downstream compute demand from OpenAI is propping up entire sectors of the market.

Consider this:

• Oracle: ~2/3 of cloud RPO tied to OpenAI

• CoreWeave: ~40% revenue exposure to OpenAI, and NVIDIA owns >5% of the company

• Microsoft: absorbing billion-dollar equity swings because its OpenAI stake behaves like a biotech option

Microsoft, Amazon, and Google can absorb that risk. The neo clouds cannot. Their entire business models depend on OpenAI growing fast enough to justify the data center buildouts NVIDIA helped finance.

If OpenAI’s monetization slips even modestly, hyperscalers shrug. Neo clouds get squeezed.
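A quick way to see why the squeeze is uneven is a simple concentration stress test. The sketch below uses the approximate exposure shares quoted above for Oracle and CoreWeave. The 20% shortfall is a purely hypothetical scenario, not a forecast, and the function name is made up for this example.

```python
# Approximate OpenAI exposure shares, taken from the figures quoted above.
exposure = {"Oracle (cloud RPO)": 2 / 3, "CoreWeave (revenue)": 0.40}


def shortfall_impact(exposure_share: float, shortfall: float) -> float:
    """Fraction of the total that is lost if the exposed portion shrinks by `shortfall`."""
    return exposure_share * shortfall


# Hypothetical scenario: OpenAI-related demand comes in 20% below plan.
SHORTFALL = 0.20

impacts = {name: shortfall_impact(share, SHORTFALL) for name, share in exposure.items()}
for name, hit in impacts.items():
    print(f"{name}: {hit:.1%} of total at risk")
```

Under that assumed scenario, roughly 13% of Oracle’s cloud backlog and 8% of CoreWeave’s revenue would be in question. A diversified hyperscaler with a far smaller exposure share would see a correspondingly smaller hit from the same shortfall.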

Small Models Shift the Math

Then comes the rise of small language models (SLMs). SLMs pull marginal inference:

• Off massive cloud GPU clusters

• Onto devices or low-power ASICs

Cloud growth continues overall — but erosion appears at the edges. And it hits unevenly.

• GPU-centric architectures suffer most

• Google benefits from inference ASICs

• Microsoft wins through agent distribution

• Meta monetizes through usage scale

• Neo clouds feel pressure from utilization declines

So Is AI a Bubble?

No. What people are reacting to is the uncomfortable sight of infrastructure being built ahead of monetization. But that is what every platform shift looks like:

• The internet

• Smartphones

• Cloud computing

All required massive front-loaded capex and delivered back-loaded returns.
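The shape of that pattern is easy to sketch. The yearly cash flows below are invented, in arbitrary units, purely to illustrate heavy early spending followed by later returns. They are not estimates for any company.

```python
from itertools import accumulate

# Hypothetical yearly net cash flows (arbitrary units): heavy spending first, returns later.
cash_flows = [-100, -60, -20, 30, 70, 120, 150]

# Running total of cash flows, year by year.
cumulative = list(accumulate(cash_flows))

# First year (0-based index) in which the running total is no longer negative, if any.
payback_year = next(
    (year for year, total in enumerate(cumulative) if total >= 0),
    None,
)

print("Cumulative:", cumulative)
print("Payback year index:", payback_year)
```

In this made-up series the running total keeps falling for three years and only turns positive in the sixth. Anyone looking at the first few years alone would see nothing but losses, which is exactly the stage where a buildout looks like a bubble.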

The Real Red Flag

Hype is not the problem. Dependency is.

OpenAI carries a massive share of today’s infrastructure economics on its back. If monetization scales, the cycle looks visionary in hindsight. If it slows, the cracks appear at the edges — among businesses built entirely around a single customer.

Final Thought

Circularity isn’t the danger signal. Dependency is.

And the companies that understand this, especially Microsoft, Google, and Amazon, are positioning themselves for the next phase of AI now rather than reacting when it finally arrives.